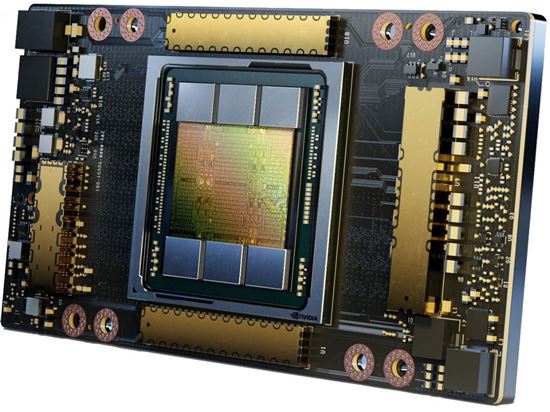

The NVIDIA L4 is a data center GPU optimized for AI inference and machine learning, built on the Ada Lovelace architecture with fourth-generation Tensor Cores and 24 GB of GDDR6 memory.

In this article, we will explain what NVIDIA L4 is, what its architecture involves, and why it’s important for technology today.

What Is the NVIDIA L4?

The NVIDIA L4 is a data center GPU designed for AI inference workloads. Optimized for tasks like natural language processing, computer vision, and video processing, the L4 accelerates real-time predictions from trained AI models. Its low power draw makes it a good fit for data centers and cloud environments, serving industries such as healthcare, finance, e-commerce, and cloud services.

NVIDIA L4 Architecture: What Makes It Special?

NVIDIA L4 architecture is designed to optimize performance for AI workloads, which are becoming more complex as technology advances. To understand this better, let’s break down the components that make up the L4 architecture.

Ada Lovelace Architecture:

The NVIDIA L4 is built on the Ada Lovelace architecture, the successor to Ampere and to earlier generations like Turing and Volta. Ada Lovelace is designed to deliver higher performance per watt, which suits power-constrained environments like data centers: the L4 is a low-profile, single-slot card with a maximum board power of 72 W. It handles both graphics tasks and AI workloads such as deep learning inference, and its energy efficiency matters most in large-scale deployments where many cards run continuously.

CUDA Cores and Tensor Cores:

NVIDIA L4 GPUs contain CUDA cores, general-purpose parallel processing units that run many threads at once. CUDA is also the name of NVIDIA’s programming platform, which lets developers offload intensive computations from the CPU to the GPU. In addition, the L4 features fourth-generation Tensor Cores, specialized units that perform matrix multiply-accumulate operations on small tiles of data in reduced-precision formats such as FP16, FP8, and INT8. Because neural networks spend most of their time in matrix multiplication, Tensor Cores account for much of the L4’s speed on machine learning workloads, while CUDA cores handle the remaining general-purpose work.
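To make the multiply-accumulate idea more concrete, here is a minimal pure-Python sketch of the arithmetic pattern D = A x B + C applied tile by tile. It is only an illustration: the function names `matmul_accumulate` and `tiled_matmul` and the tile size of 4 are assumptions made for this example, and real Tensor Cores perform the tile operation in hardware rather than in a loop.

```python
def matmul_accumulate(a, b, c):
    """Return d = a x b + c for small matrices stored as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    d = [row[:] for row in c]  # start from the accumulator tile
    for i in range(rows):
        for j in range(cols):
            acc = d[i][j]
            for k in range(inner):
                acc += a[i][k] * b[k][j]
            d[i][j] = acc
    return d


def tiled_matmul(a, b, tile=4):
    """Multiply a by b by applying matmul_accumulate to tile x tile blocks.

    The tile size is a hypothetical value chosen for illustration.
    """
    n, k_dim, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k_dim, tile):
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                c_tile = [row[j0:j0 + tile] for row in out[i0:i0 + tile]]
                d_tile = matmul_accumulate(a_tile, b_tile, c_tile)
                # Write the updated accumulator tile back into the output.
                for di, row in enumerate(d_tile):
                    out[i0 + di][j0:j0 + tile] = row
    return out


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    print(tiled_matmul(a, b, tile=1))
```

Each call to `matmul_accumulate` stands in for one tile-level operation; the outer loops show why large matrix products break down naturally into many independent tile operations that hardware can run in parallel.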

Multi-Instance GPU (MIG) and GPU Sharing:

MIG (Multi-Instance GPU) technology partitions a single GPU into multiple hardware-isolated instances, each with its own share of compute and memory, so several AI models can run side by side without interfering with each other. MIG is available on specific data center GPUs such as the A100 and H100; the L4 does not support it. To share an L4 among several workloads, data centers typically rely on NVIDIA vGPU software or time-slicing instead, which improve utilization but do not provide the same hardware-level isolation as MIG.

Memory and Bandwidth:

The L4 is equipped with 24 GB of GDDR6 memory rather than the HBM (high-bandwidth memory) stacks found on larger data center GPUs such as the A100 and H100. Memory bandwidth determines how quickly data moves between the GPU’s memory and its compute units, so it often sets the ceiling on throughput when a model’s weights must be read repeatedly during inference. The 24 GB capacity is enough to hold many mid-sized models on a single card, while GDDR6 keeps cost and power draw lower than HBM would.
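As a rough illustration of why memory bandwidth matters, the sketch below estimates the minimum time needed to read a model’s weights from GPU memory once. The numbers are assumptions for the example, not measurements: a bandwidth of 300 GB/s (the figure commonly quoted for the L4; check the datasheet for your card) and a hypothetical 7-billion-parameter model stored at 1 byte per parameter. The function names are also made up for this example.

```python
def min_seconds_per_pass(weight_bytes, bandwidth_bytes_per_s):
    """Lower bound on the time to stream all weights from memory once."""
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return weight_bytes / bandwidth_bytes_per_s


def max_passes_per_second(weight_bytes, bandwidth_bytes_per_s):
    """Upper bound on full weight reads per second (bandwidth-bound case)."""
    return 1.0 / min_seconds_per_pass(weight_bytes, bandwidth_bytes_per_s)


if __name__ == "__main__":
    ASSUMED_BANDWIDTH = 300e9   # bytes per second, assumed for illustration
    ASSUMED_WEIGHTS = 7e9 * 1   # 7e9 parameters at 1 byte each, hypothetical
    seconds = min_seconds_per_pass(ASSUMED_WEIGHTS, ASSUMED_BANDWIDTH)
    print(f"at least {seconds * 1000:.1f} ms per pass over the weights")
    print(f"at most {max_passes_per_second(ASSUMED_WEIGHTS, ASSUMED_BANDWIDTH):.1f} passes per second")
```

This is a simple bound, not a performance prediction: real workloads also depend on compute throughput, caching, and batching, but the estimate shows how bandwidth caps the rate at which a memory-bound model can run.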

What Architecture Is the NVIDIA RTX 40 Series?

The NVIDIA GeForce RTX 40 series, including models like the RTX 4090 and RTX 4080, is based on the Ada Lovelace architecture, the same architecture the L4 is built on. Compared to previous generations, Ada Lovelace improves performance, energy efficiency, and AI capabilities. It enhances ray tracing, supports DLSS 3 (Deep Learning Super Sampling) for higher frame rates, and speeds up gaming and creative applications. Where the RTX 40 series targets desktops and workstations, the L4 packages the same architecture in a low-power card for servers.

Benefits of NVIDIA L4 GPUs:

- Performance at Scale: The L4’s architecture offers a high level of performance across a variety of AI and machine learning tasks, from natural language processing to autonomous systems. Its parallel processing capabilities ensure it can handle large-scale workloads efficiently.

- Cost-Efficiency: By using Tensor Cores and energy-efficient designs, the L4 helps lower operational costs, making it a more attractive option for businesses looking to deploy large AI models or scale their operations without incurring high energy bills.

- Real-Time Inference: The L4’s design focuses on maximizing throughput while minimizing latency, which is essential for real-time applications like autonomous driving and fraud detection.

- Flexibility: NVIDIA L4 GPUs can be deployed in a wide range of environments, from on-premises data centers to cloud infrastructure, giving businesses greater control over their computing resources.
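The trade-off between throughput and latency mentioned above can be sketched with a simple model. Assume, purely for illustration, that serving a batch costs a fixed overhead plus a per-request cost; larger batches then raise throughput but also raise latency, so the goal is the largest batch that still fits a latency budget. The function names and all timing values here are hypothetical.

```python
def batch_latency_ms(batch, fixed_ms, per_item_ms):
    """Latency of one batch under an assumed linear cost model."""
    return fixed_ms + per_item_ms * batch


def best_batch(latency_budget_ms, fixed_ms, per_item_ms, max_batch=64):
    """Return (batch, latency_ms, requests_per_second) for the largest batch
    whose latency fits the budget, or None if even batch size 1 is too slow."""
    best = None
    for batch in range(1, max_batch + 1):
        latency = batch_latency_ms(batch, fixed_ms, per_item_ms)
        if latency > latency_budget_ms:
            break  # latency only grows with batch size in this model
        throughput = batch * 1000 / latency
        best = (batch, latency, throughput)
    return best


if __name__ == "__main__":
    # Hypothetical numbers: 4 ms fixed overhead, 0.5 ms per request,
    # and a 20 ms latency budget.
    print(best_batch(latency_budget_ms=20.0, fixed_ms=4.0, per_item_ms=0.5))
```

Under this model throughput always rises with batch size, which is why the search stops at the latency limit. Real GPUs are not perfectly linear, so in practice these values would come from benchmarking the actual model.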

How Does the NVIDIA L4 Architecture Compare to Other Architectures?

When comparing the NVIDIA L4 to older NVIDIA architectures like Turing and Volta, or to AMD’s GPUs, it becomes clear that the L4 is tuned for efficient AI inference. The key differences lie in the generation of its Tensor Cores, its support for lower-precision formats such as FP8, and its performance per watt.

- Turing and Volta: Volta (2017) introduced Tensor Cores and targeted data center compute, while Turing (2018) brought Tensor Cores to graphics cards and powered the T4, the inference card that the L4 succeeds. Both remain capable, but their earlier-generation Tensor Cores lack newer formats such as FP8, which makes them less efficient for current AI workloads.

- AMD GPUs: AMD also produces high-performance GPUs and competes in AI with its ROCm software platform. NVIDIA’s advantage comes largely from the maturity of the CUDA ecosystem and the broad framework support for its Tensor Cores, although AMD continues to narrow the gap.

The L4 stands out less for raw peak performance than for how much inference throughput it delivers within a small power and space budget, which makes it easy to deploy at scale.

Why Is NVIDIA L4 Architecture Important?

The NVIDIA L4 is not just another graphics card; it is designed specifically for serving AI models in production. As artificial intelligence becomes more integrated into everyday life, the demand for powerful and efficient computing systems grows. The L4 is important because it provides hardware that can meet this demand within the power and space limits of ordinary servers.

Faster AI Model Training:

Training AI models, particularly deep neural networks, requires significant computational power. Although the L4 is aimed primarily at inference, its Tensor Cores can also accelerate fine-tuning and the training of smaller models, helping data scientists and researchers iterate more quickly. Shorter training runs speed up the development and testing of AI algorithms and allow more experiments, which tends to improve the accuracy and robustness of models over time. The largest training jobs are usually better served by higher-end data center GPUs.

Energy Efficiency:

AI workloads consume a considerable amount of energy, making power efficiency crucial. The L4 is designed to deliver high inference throughput at a maximum board power of 72 W, far below the several hundred watts drawn by flagship data center GPUs. This efficiency matters for companies and research institutions that need to balance performance with sustainability goals. Lower energy use reduces operating costs and environmental impact, especially when many cards run around the clock.
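A quick back-of-the-envelope calculation shows how board power translates into energy use. The sketch below assumes a card drawing 72 W continuously for a full year and a hypothetical electricity price of 0.12 per kWh; both the price and the function names are assumptions for illustration, and real power draw varies with load.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def annual_energy_kwh(board_watts, utilization=1.0, hours=HOURS_PER_YEAR):
    """Energy used in a year, in kWh, at a given average utilization."""
    return board_watts * utilization * hours / 1000


def annual_energy_cost(board_watts, price_per_kwh, utilization=1.0):
    """Yearly electricity cost for one card (cooling overhead not included)."""
    return annual_energy_kwh(board_watts, utilization) * price_per_kwh


if __name__ == "__main__":
    ASSUMED_PRICE = 0.12  # hypothetical price per kWh
    print(f"{annual_energy_kwh(72):.2f} kWh per year")
    print(f"{annual_energy_cost(72, ASSUMED_PRICE):.2f} per year at the assumed price")
```

Multiplying the result by the number of cards in a deployment gives a first estimate of the energy budget, before cooling and the rest of the server are taken into account.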

Scalability:

The NVIDIA L4 architecture is highly scalable, making it adaptable to a range of AI workloads. It can handle both small-scale AI tasks and large-scale deployments across data centers. Whether deployed on a single machine or across thousands of servers, the L4 architecture provides the necessary power and flexibility. This scalability is essential for businesses and research environments that need to expand their AI capabilities, allowing them to scale operations as demands grow without compromising performance.
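Scaling out usually starts with a simple capacity estimate: divide the target request rate by what one GPU can sustain, leaving some headroom for traffic spikes. The sketch below shows that arithmetic; the function name, the per-GPU rate, and the 70% headroom factor are hypothetical values chosen for the example.

```python
import math


def gpus_needed(target_rps, per_gpu_rps, headroom=0.7):
    """Number of GPUs needed to serve target_rps requests per second.

    headroom is the fraction of each GPU's measured capacity that is
    planned for use; the default of 0.7 is an assumption, not a rule.
    """
    if per_gpu_rps <= 0 or not 0 < headroom <= 1:
        raise ValueError("per_gpu_rps must be positive and headroom in (0, 1]")
    return math.ceil(target_rps / (per_gpu_rps * headroom))


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests/s, 1,600 requests/s per GPU.
    print(gpus_needed(target_rps=10_000, per_gpu_rps=1_600))
```

The per-GPU rate should come from benchmarking the real model, since it depends heavily on model size, precision, and batch size.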

Versatility for Different Industries:

The NVIDIA L4 is designed for versatility, supporting a wide range of industries beyond gaming. In healthcare, it can analyze medical images and genetic data. For autonomous vehicles, it can accelerate the data center side of development, such as running perception models on recorded sensor data. In robotics, it can serve the models that help machines adapt to new environments and perform tasks like sorting or delivery. In finance, L4 GPUs speed up tasks such as fraud detection, risk analysis, and algorithmic trading.

FAQs

1. What is NVIDIA L4 architecture?

The NVIDIA L4 is a data center GPU built on the Ada Lovelace architecture. It is designed for AI inference, machine learning, video, and graphics workloads, with an emphasis on performance per watt.

2. Why is NVIDIA L4 architecture important?

It allows faster processing of AI models, reduces energy use, and increases scalability, making it ideal for industries like healthcare, finance, and robotics.

3. What is the main difference between L4 and older NVIDIA architectures?

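The L4 uses fourth-generation Tensor Cores and supports newer low-precision formats such as FP8, which makes it more efficient for AI inference than older architectures like Turing and Volta, whose Tensor Cores are from earlier generations.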

4. What are Tensor Cores in NVIDIA L4?

Tensor Cores are special processing units in L4 GPUs designed to accelerate AI and deep learning tasks, making AI models run faster.

5. Can NVIDIA L4 be used for gaming?

Not in the usual sense. The L4 is a server card with no display outputs, so it cannot be used like a desktop gaming card. It can render graphics for cloud gaming or virtual workstations, but it is mainly designed for AI and machine learning workloads.

Conclusion

The NVIDIA L4 is a data center GPU tailored for AI inference and machine learning. Built on the Ada Lovelace architecture and equipped with fourth-generation Tensor Cores and 24 GB of GDDR6 memory, it combines strong inference performance with low power consumption. Its scalability and versatility make the L4 valuable for industries such as healthcare, robotics, and finance, meeting the increasing demands of AI workloads and accelerating innovation across sectors.