To check GPU availability in PyTorch, use torch.cuda.device_count(). It returns the number of GPUs PyTorch can access for faster computations.

This article will guide you through the process of checking the number of GPUs available in PyTorch.

Why is it Important to Check GPU Availability in PyTorch?

Before running heavy computations such as training deep learning models, it’s crucial to ensure that PyTorch can access your system’s GPU. Leveraging GPU resources is vital as it can dramatically speed up the training and testing process of machine learning models, offering a significant performance boost compared to relying solely on a CPU. If you have multiple GPUs, PyTorch can also distribute the computations across them, further accelerating the model training process.

Benefits of Using a GPU:

- Faster Computation: GPUs are optimized for parallel processing, allowing them to handle tasks like matrix multiplication and deep learning operations much more efficiently than CPUs. This parallelization results in significantly faster computation, especially for complex models and large datasets.

- Larger Models: The memory capacity and computational power of GPUs enable the training of larger models with more parameters. This is particularly beneficial when dealing with complex deep learning architectures that might be too resource-intensive to train on a CPU.

- Efficiency: Using a GPU ensures faster model training times, which means less waiting for model convergence and quicker iterations in research or development workflows. It also leads to better resource utilization by offloading computation-heavy tasks from the CPU.

Steps to Check GPU Availability in PyTorch:

Install PyTorch with GPU Support:

To use GPUs in PyTorch, you first need to install a build that supports GPU acceleration. Make sure to install PyTorch with CUDA support, which allows PyTorch to access NVIDIA GPUs. You can install it using a package manager like pip or conda. The exact command depends on your operating system and CUDA version, and the install selector on the PyTorch website generates the right one for your setup. A CPU-only build will run, but it will never detect a GPU.

Import PyTorch:

After installing PyTorch, the next step is to import it into your Python script or Jupyter notebook. Add import torch at the beginning of your code. Once PyTorch is imported, the GPU-related functions in torch.cuda are available, and you can start checking for GPU availability and choosing whether computations run on the CPU or the GPU.

Check if CUDA is Available:

Before using the GPU, you need to check if CUDA (NVIDIA’s platform for GPU computing) is available on your system. PyTorch uses CUDA to interact with NVIDIA GPUs, so this step is essential. You can check this by running torch.cuda.is_available(). If the result is True, PyTorch can use the GPU through CUDA. If it is False, the usual causes are that your system doesn’t have an NVIDIA GPU, the NVIDIA driver is missing or misconfigured, or the installed PyTorch build is CPU-only.
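
The check described above can be sketched as follows. This is a minimal example that assumes PyTorch is already installed; the variable name cuda_ok is only illustrative.

```python
import torch

# True only when this PyTorch build has CUDA support and a usable GPU is found.
cuda_ok = torch.cuda.is_available()

if cuda_ok:
    print("CUDA is available; PyTorch can use the GPU.")
else:
    print("CUDA is not available; computations will have to run on the CPU.")
```

The call is safe to run on any machine, including ones with no GPU at all, so it works well as the first line of a setup check.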

Check the Number of GPUs:

Once CUDA is available, you can check how many GPUs are present on your system. PyTorch allows you to see how many GPUs it can utilize for parallel processing. This is done using the command torch.cuda.device_count(). If your system has multiple GPUs, PyTorch can distribute work across them, which speeds up tasks like model training. Knowing the number of GPUs helps you optimize resource usage and training efficiency.
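
As a small sketch of this step, assuming PyTorch is installed, the snippet below prints the GPU count and the device index of each GPU. The variable name num_gpus is illustrative.

```python
import torch

# Number of CUDA devices PyTorch can see; 0 when none are visible.
num_gpus = torch.cuda.device_count()
print(f"GPUs visible to PyTorch: {num_gpus}")

# Device indices run from 0 to num_gpus - 1.
for index in range(num_gpus):
    print(f"  cuda:{index}")
```

The count reflects the GPUs visible to the process, which can be lower than the number physically installed if the CUDA_VISIBLE_DEVICES environment variable restricts them.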

Get GPU Information:

You can gather additional details about your GPU, such as its name, memory usage, and total memory capacity. To get the GPU name, use torch.cuda.get_device_name(). This helps identify the GPU model. The total memory capacity is reported by torch.cuda.get_device_properties(), in the total_memory field. To see current usage, torch.cuda.memory_allocated() returns the memory occupied by tensors, and torch.cuda.memory_reserved() returns the memory held by PyTorch’s caching allocator, which includes the allocated part. All values are in bytes. This information helps you manage memory efficiently and monitor GPU utilization during model training.

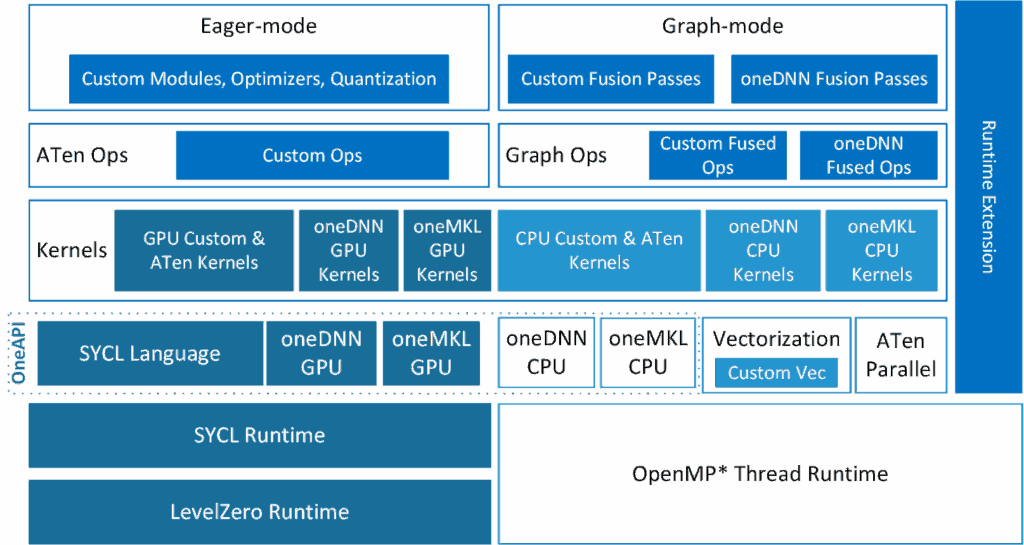
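
The queries described above could be combined along these lines. This is a sketch rather than a definitive tool: describe_gpus is a hypothetical helper written for this example, not part of PyTorch, and it reads total capacity from torch.cuda.get_device_properties().

```python
import torch

GIB = 1024 ** 3  # bytes per gibibyte


def describe_gpus():
    """Return one dict per visible GPU (hypothetical helper, not a PyTorch API)."""
    info = []
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        info.append({
            "index": index,
            "name": torch.cuda.get_device_name(index),
            "total_gib": props.total_memory / GIB,                       # capacity
            "allocated_gib": torch.cuda.memory_allocated(index) / GIB,   # used by tensors
            "reserved_gib": torch.cuda.memory_reserved(index) / GIB,     # held by allocator
        })
    return info


gpus = describe_gpus()
if not gpus:
    print("No CUDA GPUs visible to PyTorch.")
for gpu in gpus:
    print(f"cuda:{gpu['index']} {gpu['name']}: "
          f"{gpu['allocated_gib']:.2f} GiB allocated, "
          f"{gpu['reserved_gib']:.2f} GiB reserved, "
          f"{gpu['total_gib']:.2f} GiB total")
```

On a machine without a GPU the list is simply empty, so the helper can be called unconditionally.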

Use GPUs for PyTorch Operations:

To use a GPU in PyTorch, you need to move your data and model to the GPU. This is done by transferring the tensor or model to the device using .to(device) or .cuda(). PyTorch does not switch devices on its own: calling .cuda() without a usable GPU raises an error. The common pattern is to check if CUDA is available, set the device to "cuda" if it is and to "cpu" otherwise, and then pass that device to .to(device). The same code then runs on the GPU when one is present and on the CPU when it is not.

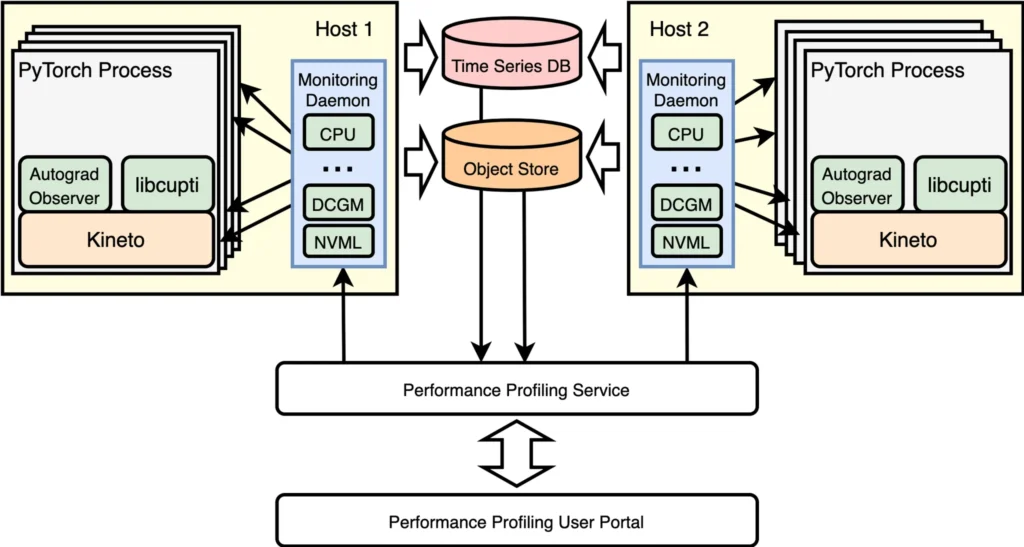
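
A minimal sketch of this pattern is shown below. The tensor shape and the small nn.Linear model are arbitrary choices for illustration.

```python
import torch

# Pick the GPU when CUDA is usable, otherwise fall back to the CPU explicitly.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Move both the input tensor and the model to the chosen device.
x = torch.randn(4, 3).to(device)
model = torch.nn.Linear(3, 2).to(device)

# Inputs and model must be on the same device for the forward pass to work.
with torch.no_grad():
    y = model(x)

print(f"Output shape {tuple(y.shape)} computed on {y.device}")
```

Keeping a single device variable and passing it everywhere avoids the device-mismatch errors that appear when a model is on the GPU and its input is still on the CPU.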

Managing Multiple GPUs in PyTorch:

If you have multiple GPUs, you can assign specific tasks or models to different GPUs. One option is torch.nn.DataParallel, a wrapper that splits each input batch across several GPUs, runs a copy of the model on each, and gathers the results. For serious multi-GPU training, the PyTorch documentation recommends torch.nn.parallel.DistributedDataParallel instead, which requires more setup but generally scales better. You can also manually assign a model to a specific GPU by passing a device such as "cuda:1" to .to(device). This helps you leverage all available GPUs to speed up computation, especially when training large models with big datasets.
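
The DataParallel approach can be sketched as follows. The model and batch sizes are placeholders, and the wrapper is applied only when more than one GPU is visible, so the same script also runs on a single GPU or on the CPU.

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(10, 1)

# Wrap the model only when there is more than one GPU to split batches across.
if torch.cuda.device_count() > 1:
    model = nn.DataParallel(model)

model = model.to(device)

# A batch of 32 samples; with DataParallel it is split along dimension 0.
batch = torch.randn(32, 10, device=device)
output = model(batch)

print(f"Output shape: {tuple(output.shape)} on {output.device}")
```

To pin work to one particular GPU instead, a device such as torch.device("cuda:1") can be passed to .to(), provided that index exists on the machine.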

Troubleshooting GPU Issues:

Sometimes, you may encounter issues when working with GPUs in PyTorch. Here are some common problems and their solutions:

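- CUDA Out of Memory Error: If you run out of GPU memory, reduce the batch size first. torch.cuda.empty_cache() releases cached memory that PyTorch holds but is not currently using, which can help when other processes need it, but it does not free memory still occupied by live tensors. Delete references to tensors you no longer need before calling it.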

- Driver Issues: Ensure that the correct NVIDIA drivers are installed, and check that CUDA is properly configured. Driver issues can prevent PyTorch from accessing the GPU for computations.

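- PyTorch Version Compatibility: Verify that your PyTorch build targets a CUDA version your NVIDIA driver supports. torch.version.cuda shows the CUDA version PyTorch was built with, and it is None for CPU-only builds. Incompatible versions can cause errors or leave the GPU undetected. Check the PyTorch website for the supported combinations to ensure proper setup.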
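
When troubleshooting, it can help to print the relevant facts in one place. The snippet below is one possible diagnostic sketch; the report dictionary and its keys are illustrative names, not a PyTorch feature.

```python
import torch

# Collect the values most often needed when the GPU is not being used.
report = {
    "torch_version": torch.__version__,
    "built_with_cuda": torch.version.cuda,      # None for CPU-only builds
    "cuda_available": torch.cuda.is_available(),
    "gpu_count": torch.cuda.device_count(),
}

for key, value in report.items():
    print(f"{key}: {value}")

if report["cuda_available"]:
    # Release cached blocks that are not backing any live tensor.
    torch.cuda.empty_cache()
    print(f"allocated bytes: {torch.cuda.memory_allocated()}")
    print(f"reserved bytes:  {torch.cuda.memory_reserved()}")
```

If built_with_cuda is None, the installed PyTorch is a CPU-only build and reinstalling a CUDA-enabled build is the likely fix. If it shows a version but cuda_available is False, the driver is the more likely culprit.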

How to check GPU availability in Python?

To check if a GPU is available in Python, you can use the PyTorch library. First, ensure that PyTorch is installed on your system. Then, you can verify GPU availability by checking if CUDA (the platform for running computations on NVIDIA GPUs) is accessible with torch.cuda.is_available(). If CUDA is available, your code can place tensors and models on the GPU, which speeds up tasks like model training. If CUDA is not available, your code should select the CPU as the device instead.
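
For scripts that may run where PyTorch is not installed, the two checks can be combined. This is a sketch under that assumption; gpu_available is a hypothetical helper name chosen for this example.

```python
import importlib.util


def gpu_available():
    """Return True if PyTorch is installed and reports a usable CUDA GPU."""
    # find_spec returns None when the package cannot be imported.
    if importlib.util.find_spec("torch") is None:
        return False
    import torch
    return torch.cuda.is_available()


has_gpu = gpu_available()
print("GPU available" if has_gpu else "No GPU available (or PyTorch not installed)")
```

The import happens inside the function so the script does not crash with ImportError on systems without PyTorch.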

FAQs

1. How do I check the number of available GPUs in PyTorch?

To check the number of available GPUs, use the torch.cuda.device_count() function. If the result is 0, no GPU is detected, meaning only CPU will be used.

2. How can I check if my GPU is available in PyTorch?

Use torch.cuda.is_available() to check if your GPU is available. If it returns True, your GPU can be used; if False, no GPU is accessible.

3. What should I do if no GPU is detected by PyTorch?

Ensure your system has an NVIDIA GPU, that an up-to-date NVIDIA driver is installed, and that you installed a CUDA-enabled build of PyTorch whose CUDA version your driver supports.

4. How can I get more information about my GPU in PyTorch?

Use torch.cuda.get_device_name() for the GPU name, torch.cuda.get_device_properties() for total memory and other hardware properties, and torch.cuda.memory_allocated() and torch.cuda.memory_reserved() for current memory usage.

5. How do I use multiple GPUs in PyTorch?

To use multiple GPUs, wrap your model in torch.nn.DataParallel, which automatically splits each batch across GPUs. You can also manually assign different models or tensors to specific GPUs with .to(device) for finer control.

Conclusion

In conclusion, checking the number of available GPUs in PyTorch is crucial for enhancing performance in deep learning tasks. By using functions like torch.cuda.device_count(), you can easily determine GPU availability. Properly utilizing GPUs leads to faster computations, better resource management, and more efficient model training, significantly speeding up the process and improving overall productivity.